The democratization of AI and the use of LLM services such as ChatGPT and Bard (now Gemini) in enterprise use cases also pose significant and evolving risks to the organization. Without proper controls, any type of AI model can quickly generate compounding negative effects that may overshadow any positive gains from AI.

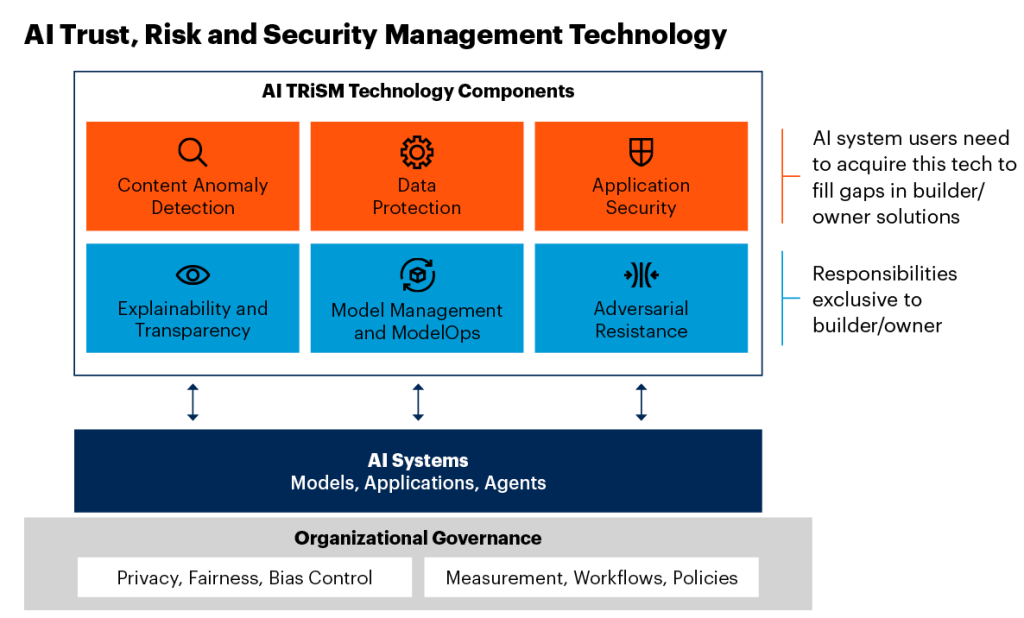

AI TRiSM (Trust, Risk and Security Management) is a framework comprising a set of risk and security controls and trust enablers that help enterprises govern and manage the life cycle of AI models and AI applications. It also helps organizations comply with upcoming regulations such as the EU's AI Act.

Gartner predicts that by 2026, enterprises that apply TRiSM controls to AI applications will consume at least 50% less inaccurate or illegitimate information that leads to faulty decision-making.

Source: Adapted from Gartner.

See also the Splunk AI TRiSM framework.